Recent advances in speech enhancement (SE) have moved beyond traditional mask or signal prediction methods, turning instead to pre-trained audio models for richer, more transferable features. These models, such as WavLM, extract audio embeddings that improve SE performance. Some approaches use these embeddings to predict masks or combine them with spectral data for better accuracy. Others explore generative techniques, using neural vocoders to reconstruct clean speech directly from noisy embeddings. While effective, these methods typically either keep the pre-trained model frozen, which limits adaptability, or require extensive fine-tuning, which increases computational cost. Both choices make transfer to other tasks more difficult.

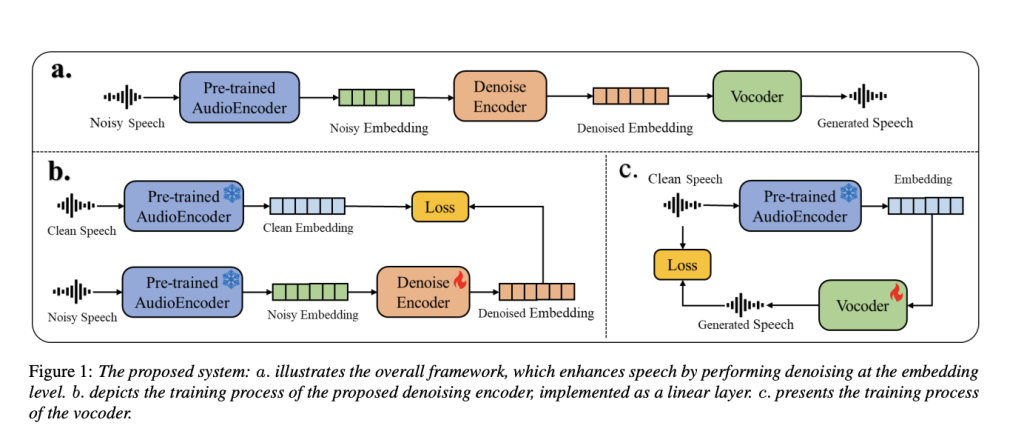

Researchers at MiLM Plus, Xiaomi Inc., present a lightweight and flexible SE method built on pre-trained models. First, a frozen audioencoder extracts audio embeddings from noisy speech. A small denoise encoder then cleans these embeddings, and a vocoder generates clean speech from them. Unlike task-specific models, both the audioencoder and vocoder are pre-trained separately, making the system adaptable to tasks like dereverberation or separation. The experiments show that generative audioencoders outperform discriminative ones in terms of speech quality and speaker fidelity. Despite its simplicity, the system is efficient and surpasses a leading SE model in listening tests.

The proposed speech enhancement system has three main components. First, noisy speech is passed through a pre-trained audioencoder, which generates noisy audio embeddings. A denoise encoder then refines these embeddings to produce cleaner versions, which a vocoder finally converts back into speech. The denoise encoder and vocoder are trained separately, but both rely on the same frozen, pre-trained audioencoder. During training, noisy and clean embeddings are generated in parallel from paired speech samples, and the denoise encoder minimizes the Mean Squared Error between its output for the noisy embeddings and the corresponding clean embeddings. This encoder is built on a Vision Transformer (ViT) architecture with standard activation and normalization layers.
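The training objective described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the frozen audioencoder is replaced by a fixed random projection, the ViT denoise encoder by a single linear layer, and the names `audioencoder`, `W_ENC`, `W_DEN`, `FRAME`, and `EMB_DIM` are hypothetical. The point it shows is that only the denoiser's weights are updated, using an MSE loss between denoised and clean embeddings.

```python
import numpy as np

rng = np.random.default_rng(0)

FRAME, EMB_DIM = 160, 32  # hypothetical frame length and embedding size

# Stand-in for the frozen, pre-trained audioencoder: a fixed random projection.
# Its weights are never updated anywhere below.
W_ENC = rng.standard_normal((FRAME, EMB_DIM)) / np.sqrt(FRAME)

def audioencoder(wave):
    """Map a waveform of n_frames * FRAME samples to (n_frames, EMB_DIM) embeddings."""
    frames = wave.reshape(-1, FRAME)
    return np.tanh(frames @ W_ENC)

def mse(a, b):
    return float(np.mean((a - b) ** 2))

# Paired toy data: a clean signal and the same signal with additive noise.
clean = rng.standard_normal(FRAME * 256)
noisy = clean + 0.5 * rng.standard_normal(clean.shape)

# Both sides of the pair are embedded by the same frozen encoder.
e_clean = audioencoder(clean)
e_noisy = audioencoder(noisy)

# Stand-in for the denoise encoder: one linear layer, initialised to identity
# (the article describes a ViT; a linear map keeps the sketch short).
W_DEN = np.eye(EMB_DIM)
loss_before = mse(e_noisy @ W_DEN, e_clean)

lr = 1.0
for _ in range(500):
    pred = e_noisy @ W_DEN                            # denoised embeddings
    grad = 2.0 * e_noisy.T @ (pred - e_clean) / pred.size  # d(MSE)/d(W_DEN)
    W_DEN -= lr * grad                                # only the denoiser is trained

loss_after = mse(e_noisy @ W_DEN, e_clean)
print(f"MSE before: {loss_before:.4f}, after: {loss_after:.4f}")
```

At inference time, the denoised embeddings `e_noisy @ W_DEN` would be handed to the separately trained vocoder in place of the noisy ones.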

For the vocoder, training is done in a self-supervised way using clean speech data alone. The vocoder learns to reconstruct speech waveforms from audio embeddings by predicting Fourier spectral coefficients, which are converted back to audio through the inverse short-time Fourier transform. It adopts a slightly modified version of the Vocos framework, tailored to accommodate various audioencoders. A Generative Adversarial Network (GAN) setup is employed, where the generator is based on ConvNeXt, and the discriminators include both multi-period and multi-resolution types. The training also incorporates adversarial, reconstruction, and feature matching losses. Importantly, throughout the process, the audioencoder remains unchanged, using weights from publicly available models.
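The final step of such a vocoder, turning predicted Fourier coefficients into a waveform, can be sketched as follows. This is an illustration under assumptions, not the paper's code: it assumes the network head outputs a log-magnitude and a phase per frequency bin (as Vocos-style heads do), and it substitutes the analysed spectrum of a test tone for real network output. `N_FFT`, `HOP`, and `head_to_waveform` are hypothetical names and settings.

```python
import numpy as np

N_FFT, HOP = 64, 16  # hypothetical STFT settings

def stft(wave, window):
    """Windowed short-time Fourier transform, shape (n_frames, N_FFT // 2 + 1)."""
    n_frames = 1 + (len(wave) - N_FFT) // HOP
    frames = np.stack(
        [wave[i * HOP : i * HOP + N_FFT] * window for i in range(n_frames)]
    )
    return np.fft.rfft(frames, axis=1)

def istft(spec, window):
    """Inverse STFT by windowed overlap-add, normalised by the summed squared window."""
    frames = np.fft.irfft(spec, n=N_FFT, axis=1) * window
    length = HOP * (len(frames) - 1) + N_FFT
    out = np.zeros(length)
    norm = np.zeros(length)
    for i, frame in enumerate(frames):
        out[i * HOP : i * HOP + N_FFT] += frame
        norm[i * HOP : i * HOP + N_FFT] += window ** 2
    return out / np.maximum(norm, 1e-8)

def head_to_waveform(log_mag, phase, window):
    """Combine predicted log-magnitude and phase into complex bins, then invert."""
    spec = np.exp(log_mag) * (np.cos(phase) + 1j * np.sin(phase))
    return istft(spec, window)

window = np.hanning(N_FFT)
t = np.arange(1024) / 16000.0
target = np.sin(2 * np.pi * 440.0 * t)  # toy "clean speech": a 440 Hz tone

# Stand-in for the generator's output: the true spectrum of the target.
spec = stft(target, window)
log_mag = np.log(np.abs(spec) + 1e-9)
phase = np.angle(spec)

recon = head_to_waveform(log_mag, phase, window)
```

Because the coefficients here come from the target itself, `recon` matches `target` away from the signal edges. In a trained vocoder the coefficients would instead be predicted from audio embeddings, and the adversarial, reconstruction, and feature matching losses would push them toward this ideal.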

The evaluation showed that generative audioencoders, such as Dasheng, consistently outperformed discriminative ones. On the DNS1 dataset, Dasheng achieved a speaker similarity score of 0.881, whereas WavLM and Whisper scored 0.486 and 0.489, respectively. In terms of speech quality, the non-intrusive metrics DNSMOS and NISQAv2 indicated notable improvements, even with smaller denoise encoders. For instance, ViT3 reached a DNSMOS of 4.03 and a NISQAv2 score of 4.41. In subjective listening tests with 17 participants, the Dasheng-based system achieved a Mean Opinion Score (MOS) of 3.87, surpassing Demucs at 3.11 and LMS at 2.98.
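The article does not say how the speaker similarity score is computed. A common choice, assumed here, is the cosine similarity between speaker embeddings of the enhanced and the reference clean utterance. The sketch below shows only that similarity measure on toy vectors; in practice a speaker verification model would supply the embeddings.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors, in [-1, 1]."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Toy vectors, not real speaker embeddings.
ref = np.array([1.0, 2.0, 3.0])
same = cosine_similarity(ref, 2.0 * ref)           # same direction, different scale
orth = cosine_similarity([1.0, 0.0], [0.0, 1.0])   # unrelated directions
```

Under this assumption, a score near 1 means the enhanced speech preserves the speaker's identity, while lower scores indicate that the reconstruction has drifted toward a different voice.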

In conclusion, the study presents a practical and adaptable speech enhancement system that relies on pre-trained generative audioencoders and vocoders, avoiding the need for full model fine-tuning. By denoising audio embeddings using a lightweight encoder and reconstructing speech with a pre-trained vocoder, the system achieves both computational efficiency and strong performance. Evaluations show that generative audioencoders significantly outperform discriminative ones in terms of speech quality and speaker fidelity. The compact denoise encoder maintains high perceptual quality even with fewer parameters. Subjective listening tests further confirm that this method delivers better perceptual clarity than an existing state-of-the-art model, highlighting its effectiveness and versatility.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.

Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.